Analytical instruments used for online chemical analysis of process streams or plant environments are generally called process analyzers. On-stream analytical data have proven to be crucial to safe and efficient operation in the petroleum, chemical, pharmaceutical, pulp and paper, power and other industries. Historically speaking, these instruments have been complex, even temperamental, systems with distinctive operational and maintenance needs. If online instrumentation, sample-handling systems and data-analysis software are to realize optimum performance, they will require continual attention from the analyzer support staff.

Increasingly, however, regulatory and high-priority economic concerns such as operator health and safety, emissions control and energy conservation are raising the importance of analyzer reliability to normal operations. Particularly with respect to regulatory and safety uses, the time logged as out-of-limits because of an analyzer outage can result in stiff fines.

In these situations, it’s important to be able to deal with routine maintenance needs, as well as to recognize and characterize maintenance needs that require more specialized skills. Sourcing such specialized skills and an expedited response to an incident frequently become high-priority items.

Looking back

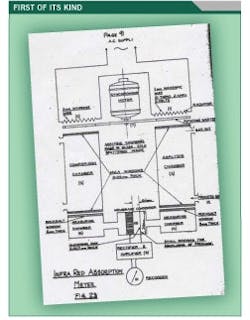

A brief overview of analyzer history can put the current situation into perspective. The technology for on-stream chemical analysis dates back about 70 years. The first nondispersive infrared (NDIR) photometers were developed and deployed in the late 1930s at the Ludwigshafen Research Lab of I.G. Farbenindustrie (German Chemical Trust later broken up by the Allied Occupation Forces into BASF, Bayer and Hoechst). A schematic of UltraRotAbsorptionSchreiber (URAS), the first on-stream analyzer, is shown in Figure 1. The URAS trade name belongs to the original manufacturer, Hartmann & Braun, which is now a unit of ABB, a leading worldwide analyzer supplier. When this work was discovered, its significance was recognized immediately (British Intelligence Operations Subcommittee Report #1007, 12 June 1946). The report states that “I.G. Farbenindustrie’s development in recent years of the infrared absorption meter and the magnetic oxygen recorder represent a great advance.”

Figure 1. This block diagram shows the major components and configuration of the first on-stream analyzer.

U.S. chemical and petroleum companies began using on-stream analyzers in the 1950s. By 1960, Standard Oil of New Jersey’s (later Exxon) Baton Rouge Refinery had a significant complement of on-stream analyzers (Table 1).

| Analyzer | Installed Cost ($M) | Suppliers |
| --- | --- | --- |
| Gas chromatograph | 10 to 15 | Beckman, Consolidated Electrodynamics, Greenbrier, Perkin Elmer |
| Colorimeter | 8 | Beckman |
| Densitometer | 8 | Precision Thermometer & Instrument |
| Final boiling point | 9 | Hallikainen, Precision Scientific, Technical Oil Tool Co. (TOTCO) |
| Flash point | 10 | Precision Scientific |
| Hydrogen sulfide (Pb acet tape) | 11 | Minneapolis Honeywell Rubicon |
| Ionization chamber (ppb gases) | 10 | Mine Safety Appliances |
| Initial boiling point | 5 to 8 | Hallikainen, TOTCO |
| Infrared (NDIR) | 8 to 12 | Beckman, Liston-Becker, Mine Safety Appliances |
| Moisture (electrolytic) | 7 | Beckman, Consolidated Electrodynamics, Mfrs Engineering and Equipment |
| Moisture (heat of adsorption) | 14 | Mine Safety Appliances |
| Differential refractometer | 6 to 12 | Consolidated Electrodynamics, Greenbrier |
| Reid vapor pressure (RVP) | 7 | Precision Scientific |
| Viscometer | 8 | Hallikainen |
| Ultraviolet | 10 | Analytic Systems Co. |

The emergence of real-time digital computers in the 1960s, followed by the microelectronics revolution and the large-scale integration microprocessor in the 1970s, eventually allowed exploitation of highly sophisticated analytical techniques for online analysis. These advances took several decades to mature. During the past 10 years or so, the full power of on-stream chemical analysis, combined with modern information technology, has taken hold throughout the process industries and is generating higher productivities, yields, efficiencies and product quality.

Realizing these benefits required highly skilled and experienced technical personnel. The analyzer community evolved into a culture suited to the care and tending of these useful industrial analyzer tools. The question facing plant operations management is: How do you realize the enormous potential benefits of on-stream analysis without the overhead of on-site analyzer specialists?

In the beginning, the computer was a highly specialized tool surrounded by a cadre of expert-practitioners. During the late 1950s, mere mortals never were allowed to approach the computational machines. The high priests who tended the main console, changed magnetic tape drives and otherwise managed the care and feeding of the electronic monster were the only humans allowed to have access to the air-conditioned inner sanctum. These operators accepted your deck of punched cards and, a day later, handed you a printout of your results or, more frequently, a memory-dump to help you with your program fault analysis. What happened between your two visits was known only to the Most High.

Ultimately, Microsoft and Intel turned Everyman into a high priest, but with a big difference. Today’s user needs to know very little about hard-core programming. The large software producers already have done it. Save for a very few exceptions, computer specialists rely on preprogrammed software tools that come with the operating system.

A big part of the challenge in early programming was trying to solve complex problems involving large data sets using machines that had tiny memories (2K to 32K). In today’s world of gigabytes, this challenge is a quaint recollection shared only by those who lived through it.

A digital parallel

Analyzers are now at a somewhat similar juncture. Plant operators need the data analyzers provide, but often can’t afford to dedicate a highly skilled individual to provide it.

The big questions are these: Are analyzers ready to cut the cord and fly without a lifeline? What steps should plant operations take to realize the benefits of analyzer-derived data while ensuring that the data flow maintains a high level of reliability?

Paul Pulicken is in charge of analyzer technology at BP’s Texas City Refinery and also works with others on this technology across the company’s five U.S. refineries. With 23 years of analyzer experience in a variety of petroleum and chemical processes, Pulicken is well positioned to offer direction in this field.

The Texas City Refinery, with roughly 1,500 analyzers, isn’t your typical manufacturing operation. Servicing these instruments with a staff of fewer than 40 people, however, is every maintenance manager’s analyzer challenge — in spades.

The No. 1 challenge, his experience shows, has been presenting a representative sample to the analyzer. This is a problem not only in the sample system’s fundamental design, but also in its continuing maintenance. Much effort is currently directed at standardizing and rationalizing sample system design and component interfacing, and assuring integrated data flow for sample-system troubleshooting and optimization. Traditionally, this activity has been long on experience and ad hoc decision-making, which leaves operating management vulnerable to key personnel reassignments.

Challenge No. 2 is the shortage of qualified project engineers and managers who have sufficient experience with modern analyzers. This situation often shortchanges the analyzer’s value as a provider of key process performance measures.

The third challenge is the lack of analyzer knowledge at the plant operations level. This translates to less-than-optimum use of analyzer data, as well as slower response to impending maintenance issues. Notably, none of these three chief challenges involves problems with the instruments themselves.

In Pulicken’s view, education and training are the major needs. For instrument training, particularly with a new or advancing technology, he relies on the instrument manufacturer. For more system-level training, particularly with multiple vendors involved, he generally opts for training courses from analyzer systems engineering firms.

According to a recent worldwide process analyzer market study (PAI/2008), the process analyzer enterprise (annual expenditures for instruments, sample systems, installation/commissioning and maintenance) was almost $7 billion in 2008. The current economic malaise is likely to defer some, perhaps many, capital projects and cause substantial reductions in analyzer-related spending, but it’s unlikely to seriously reduce the value of the analyzer enterprise. This technology is now a deeply embedded, integral part of the safe and efficient operation of much of the world’s production capacity.

Terry McMahon, Steve Walton and Jim Tatera are principals at PAI Partners, Leonia, N.J.

Resources for continuing education

The typical training courses Technical Automation Services Corp. (TASC, www.tascorp.com) conducts include advanced topics in gas chromatography, basic industrial chemistry, improving sample system reliability, insight into continuous emission monitoring, introduction to process gas chromatography and troubleshooting sample systems. Other analyzer specialists offer similar programs, and analyzer manufacturers offer extensive training on their products. Other professional training sources include ISA’s Analysis Division (www.isa.org/ad), the International Forum on Process Analytical Chemistry (www.ifpac.com) and the Center for Professional Development (www.cfpd.com).