Keith Mobley said it succinctly in his November 2002 column: "Effective preventive maintenance requires reliable, accurate instrumentation and gauges." An element of preventive maintenance is regularly testing and calibrating process instrumentation.

Test and calibration devices generally fall into three grades: industrial, instrument and laboratory (Table 1). Laboratory-grade equipment is used under controlled, stable environmental conditions. Industrial-grade devices are applied broadly for testing or monitoring, usually with whole-number resolution only. Instrument-grade equipment is used for tolerance validation, testing and calibration. This grade has at least four times the accuracy of the instrument to be calibrated or tested, typically with full-scale temperature compensation.

Accuracy varies

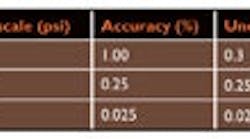

The accuracy specification refers to the degree of uncertainty associated with a measurement. Each manufacturer expresses accuracy in a format favorable to its equipment, which makes comparison a challenge.

Accuracy specifications are stated as full-scale, range, percent of scale, or percent of reading or indicated value, and may include temperature compensation. For digital instrumentation, counts or digits are often part of the specification. To interpret these varied descriptions of accuracy, calculate the magnitude of the measurement uncertainty. For example, a pressure transmitter has a range of 0 to 30 psi and an overall accuracy spec of 1% of full scale. The uncertainty in the measurement is 0.3 psi throughout the entire range (1% of 30 psi). At an indicated 15 psi, the true value can be anywhere between 14.7 psi and 15.3 psi.

Table 1. Test and calibration equipment can be graded according to its accuracy and use.
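The arithmetic behind the transmitter example can be sketched in a few lines of Python. This is an illustration only: the function names are made up for this sketch, and the percent-of-reading figure is an assumed value used to show how the two spec formats differ, not a number from any datasheet.

```python
def full_scale_band(reading, range_min, range_max, pct_full_scale):
    """Bounds on the true value for a percent-of-full-scale spec.

    The uncertainty is a fixed amount over the whole range.
    """
    uncertainty = (range_max - range_min) * pct_full_scale / 100
    return reading - uncertainty, reading + uncertainty


def percent_of_reading_band(reading, pct_reading):
    """Bounds on the true value for a percent-of-reading spec.

    The uncertainty scales with the indicated value.
    """
    uncertainty = abs(reading) * pct_reading / 100
    return reading - uncertainty, reading + uncertainty


# Transmitter from the text: 0 to 30 psi, 1% of full scale, reading 15 psi.
low, high = full_scale_band(15.0, 0.0, 30.0, 1.0)
print(f"1% of full scale: {low:.2f} to {high:.2f} psi")

# Same reading under an assumed 1%-of-reading spec (hypothetical figure).
low, high = percent_of_reading_band(15.0, 1.0)
print(f"1% of reading:    {low:.2f} to {high:.2f} psi")
```

Reducing every spec to an uncertainty in engineering units at the point of interest is what makes differently worded specifications comparable.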


Determine requirements

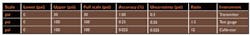

In practice, many companies have adopted a minimum 4:1 ratio: The measurement standard must be four times as accurate as the unit under test over the given range.

The range of the measurement standard must be as close as possible to that of the unit under test. Compare the accuracy at points throughout the measurement range.

For example, compare the transmitter above (0 to 30 psi, 1% full-scale accuracy, requiring a 4:1 accuracy ratio) to an industrial-grade test gauge with a 0 to 100 psi range and 0.25% full-scale accuracy. (Comparing only full-scale accuracy, one might guess the test gauge itself can be used as a calibration standard.) Just for fun, let's also compare a dedicated, instrument-grade hand-held pressure calibrator, also having a range of 0 to 100 psi, but with 0.025% full-scale accuracy.

Remember that the measurement uncertainty must be applied to the full scale (0 to 100 psi) in both cases. Table 2 illustrates the results. The test gauge, with an uncertainty of 0.25 psi against the transmitter's 0.3 psi, has a 1.2:1 accuracy ratio. It is inappropriate as a calibration standard, but is appropriate as a test or indicator gauge. The dedicated hand-held pressure calibrator has an uncertainty of 0.025 psi and a 12:1 accuracy ratio and is an appropriate calibration standard.

Now, select a more accurate transmitter with, say, 0.1% full-scale accuracy over the same range. Will that hand-held pressure calibrator still be appropriate? Table 3 shows that the dedicated hand-held calibrator is not an appropriate calibration standard if a 4:1 accuracy ratio is required. In fact, if the calibrator accuracy were 0.01%, it would still provide only a 3:1 accuracy ratio. But a hand-held calibrator with the same scale as the unit under test is an appropriate standard.

The calibration procedure should reflect the appropriate standard clearly so these calculations need to be performed only once.

Table 2. With an accuracy ratio of only 1.2:1, the test gauge is not an appropriate calibration standard for this transmitter. But at 12:1, the calibrator exceeds the 4:1 minimum and can be used.
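The comparisons in this section all follow the same pattern, which the following Python sketch tries to capture. The function names and the small floating-point tolerance are assumptions made for illustration; the ranges and accuracy figures are the ones used in the examples above.

```python
REQUIRED_RATIO = 4.0  # minimum accuracy ratio cited in the text


def full_scale_uncertainty(range_min, range_max, pct_full_scale):
    """Uncertainty in engineering units for a percent-of-full-scale spec."""
    return (range_max - range_min) * pct_full_scale / 100


def accuracy_ratio(uut_uncertainty, standard_uncertainty):
    """How many times more accurate the standard is than the unit under test."""
    return uut_uncertainty / standard_uncertainty


def meets_ratio(uut_uncertainty, standard_uncertainty, required=REQUIRED_RATIO):
    """True if the standard meets the required ratio (small float tolerance)."""
    return accuracy_ratio(uut_uncertainty, standard_uncertainty) >= required - 1e-9


# Candidate standards: (range_min, range_max, % of full scale), all in psi.
standards = {
    "test gauge, 0-100 psi, 0.25%": (0, 100, 0.25),
    "calibrator, 0-100 psi, 0.025%": (0, 100, 0.025),
    "calibrator, 0-100 psi, 0.01%": (0, 100, 0.01),
    "calibrator, 0-30 psi, 0.025%": (0, 30, 0.025),
}

# Units under test: the 1% and 0.1% transmitters, both 0 to 30 psi.
for uut_pct in (1.0, 0.1):
    uut = full_scale_uncertainty(0, 30, uut_pct)
    print(f"Transmitter 0-30 psi, {uut_pct}% FS (uncertainty {uut:.4f} psi)")
    for name, spec in standards.items():
        std = full_scale_uncertainty(*spec)
        verdict = "ok" if meets_ratio(uut, std) else "not adequate"
        print(f"  {name}: {accuracy_ratio(uut, std):.1f}:1 -> {verdict}")
```

Running the comparison for every transmitter and standard pairing makes the effect of a mismatched range easy to see: the 0 to 100 psi calibrator that comfortably covers the 1% transmitter falls short for the 0.1% unit, while the same accuracy class on a 0 to 30 psi range just meets 4:1.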

Want to document?

Smart field devices constitute a significant portion of the installed base, and an even higher percentage of new instrument sales. In terms of installed base and annual sales, HART devices make up the majority of smart devices. These microprocessor-based units have been around for more than a decade, yet the documenting process calibrator is a relatively new product. The device is a portable, intelligent field calibrator, which reduces calibration time. Calibrating a HART device typically requires a digital voltmeter to monitor the 4-20 mA output, a HART host communicator and a calibrator. With a documenting process calibrator, one unit performs the entire procedure.

The typical documenting process calibrator has:

- Multiple bays for different calibration standards.

- Some digital voltmeter capability.

- Dedicated memory for storing calibration results.

- Communication with smart transmitters.

- Dedicated memory for storing device configurations.

- Communication with a device-management software tool.

Table 3. In this example, a calibrator with 0.01% full-scale accuracy is not good enough to calibrate a transmitter with 0.1% full-scale accuracy unless the calibrator's range does not exceed the transmitter's.

Modular versus all-in-one

If you have only one calibrator to serve a variety of devices, and you don't mind sending it back to the factory, the all-in-one unit may be for you.

However, if you don't have a variety of devices to calibrate, or you have a number of technicians who share equipment, then the modular or dedicated approach may better suit your needs. With a modular approach, the calibration standards may be shared among base units, so you buy only the sensor types you require.

Perhaps the strongest argument for a modular calibrator is that it facilitates a broader array of sensor ranges better matched to your devices, so you can maintain the 4:1 accuracy ratio.

Device management

A typical field device calibration cycle consists of pre-calibration planning, performing the calibration, and post-calibration documentation. Pre-calibration planning includes gathering the calibration procedure and related documentation, locating the correct calibration standard, printing the calibration sheets, and finding a digital voltmeter (DVM), a HART host communicator and a pen. Calibration consists of checking the device against its tolerance across the entire range (as-found), adjusting it and verifying it (as-left). Post-calibration documentation includes reviewing and recording the calibration results, acquiring necessary signoffs, updating an audit trail and filing the results.

In many cases, the data are transferred manually to an Excel or Access file and printed in a standard format. The entire operation is time-consuming. Some organizations even remove field devices from service for bench calibration, which significantly extends the time it takes to calibrate a field device.

A documenting process calibrator, on the other hand, reduces the time devoted to calibration by providing the required equipment in one compact package. Coupling it with device-management software gains additional efficiency.

The "electronic birth certificate" is the initial entry in a device-management software package, and includes the device's configuration and calibration starting point. The tolerance and calibration frequency may be included in the record. When the device is due for calibration, it's automatically scheduled and the appropriate data are downloaded into the documenting process calibrator.

The documenting process calibrator then becomes a personal digital assistant containing a list of devices scheduled for calibration, the procedures and necessary calibration standards, a digital voltmeter and, if you choose, a built-in Class 5 HART host.

The documenting process calibrator walks the technician through the calibration procedure, electronically capturing and evaluating each as-found point for tolerance verification. If the device is in tolerance across its entire range, the data may be saved as-left. If not, the calibrator can execute any selected trim (zero offset, upper and lower range values, 4 mA and 20 mA), provided it has a built-in Class 5 HART host. Once the devices are calibrated, the unit is reconnected to the device-management software and field data are transferred as an electronic "bill of health." Re-ranging or calibration that changes the configuration is also recorded and transferred, minimizing the post-calibration work to a couple of keystrokes.

The user who couples a documenting process calibrator to a device-management software package can calibrate more devices during a given outage with the same manpower by working smarter.

Table 4. Typical instrument prices escalate with accuracy and complexity.
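The as-found evaluation described in this section might look something like the Python sketch below. It is not taken from any calibrator's firmware. It assumes a linear 4-20 mA transmitter, expresses error as a percent of the 16 mA output span, and uses invented test readings; the function names, the five test points and the 1% tolerance are all illustrative assumptions.

```python
def expected_ma(applied, lrv, urv):
    """Ideal output of a linear 4-20 mA transmitter ranged lrv to urv."""
    return 4.0 + 16.0 * (applied - lrv) / (urv - lrv)


def evaluate_as_found(points, lrv, urv, tolerance_pct_span):
    """Check each (applied, measured_mA) point against the tolerance.

    Returns a list of (applied, measured_mA, error_pct_span, passed)
    tuples and an overall in-tolerance flag.
    """
    results = []
    for applied, measured in points:
        error_pct = (measured - expected_ma(applied, lrv, urv)) / 16.0 * 100
        passed = abs(error_pct) <= tolerance_pct_span
        results.append((applied, measured, error_pct, passed))
    return results, all(r[3] for r in results)


# Hypothetical as-found readings for a 0 to 30 psi transmitter
# with an assumed tolerance of 1% of span.
as_found_points = [(0.0, 4.02), (7.5, 8.05), (15.0, 12.10),
                   (22.5, 16.20), (30.0, 20.05)]

results, in_tolerance = evaluate_as_found(as_found_points, 0.0, 30.0, 1.0)
for applied, measured, error_pct, passed in results:
    status = "pass" if passed else "FAIL"
    print(f"{applied:5.1f} psi  {measured:6.2f} mA  {error_pct:+.3f}% of span  {status}")

print("Save as-left" if in_tolerance else "Trim required, then re-verify")
```

In this invented data set one point falls outside the tolerance, so the device would need a trim and a second pass to produce the as-left record.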

Connectivity

ISA and a number of instrumentation vendors released a Field Calibrator Interface standard that applies to downloading test procedures into the documenting process calibrator and uploading the test results to a device-management software package. The document can be found at www.isa.org/~pmcd/FCTC/FieldCalbrIntf/FCINTF.PDF.

Some questions to ask when purchasing a device-management system include:

- Is there a need to communicate with the plant automation system?

- Is there a need to communicate with a computerized maintenance management system?

- Is there a need to communicate with field devices and, if so, what hardware and software is required?

- Does the device-management system handle both calibration and configuration?

The last point is important for two reasons. Some devices may change configuration slightly when calibrated, and in the event of a device failure, having the latest configuration simplifies cloning.

Scalability and cost

The test and calibrator market is wide and varied. Industrial-grade devices are limited primarily to test functions and generally cost less than $1,000. Instrument-grade devices cost from $500 to several thousand dollars, depending on options. Laboratory instruments, while having the highest accuracy, also have a high price and tight environmental constraints, something not everyone can afford (Table 4).

Effective preventive maintenance dictates testing and calibrating instrumentation regularly. The trend toward field calibration has produced significant savings and reduced downtime. Manufacturers of test and calibration equipment responded by designing portable, intelligent field calibrators that range from small, dedicated-purpose, hand-held units to multi-function testers and documenting process calibrators. A complete device-management system links it all to a calibration and configuration-management software package.

Louis Szabo is vice president of marketing and sales for Meriam Process Technologies. Contact him at [email protected] and (216) 928-2232.
